How Apple’s controversial study claiming AI models can’t truly reason sparked a fierce academic battle, exposed methodological flaws, and revealed the messy intersection of corporate strategy and scientific research.

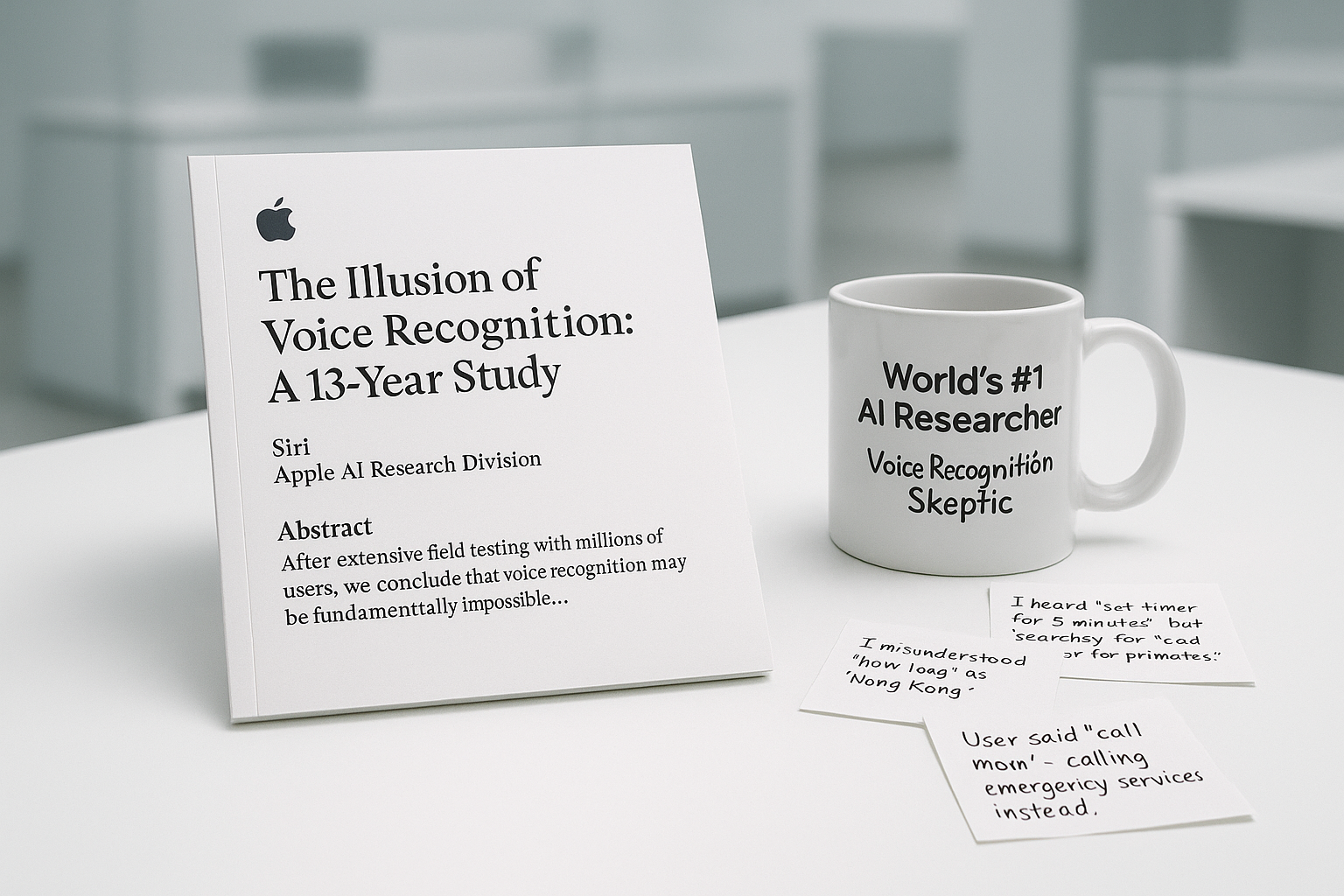

The Illusion of Apple’s AI Research