The Illusion of Apple’s AI Research

Bottom line up front: Apple’s “Illusion of Thinking” paper claimed that AI reasoning models catastrophically fail at complex tasks, but methodological flaws and suspicious timing suggest the study reveals more about corporate strategy than AI limitations.

On June 6, 2025, Apple’s research team led by Mehrdad Farajtabar dropped a bombshell: a study claiming that state-of-the-art AI reasoning models experience “complete accuracy collapse” when faced with complex puzzles. The paper, titled “The Illusion of Thinking,” tested models like OpenAI’s o1/o3, DeepSeek-R1, and Claude 3.7 Sonnet on classic logic problems, concluding that what appears to be reasoning is actually sophisticated pattern matching.

But Apple’s timing was suspect. The paper appeared just days before WWDC 2025, where the company was expected to showcase limited AI advancement compared to competitors. What followed was one of the most contentious academic controversies in recent AI history.

The LinkedIn Hype Train Derails

Before technical experts could properly evaluate Apple’s methodology, business influencers on LinkedIn had already picked sides. The speed of these reactions reveals how modern tech controversies unfold in real-time, with strategic narratives racing ahead of scientific rigor.

Dion Wiggins characterized Apple’s research as corporate manipulation, arguing that Apple had “hijacked the message, erased the messengers, and timed it for applause.” He contended that Apple couldn’t lead on innovation, so it tried to steal relevance by reframing the entire AI conversation.

Nicolas Ahar argued that Apple had “lit the fuse” on Silicon Valley’s “$100 billion AI reasoning bubble,” characterizing it as a “classic Apple move” where the company watches competitors burn through venture capital before taking advantage of the situation.

Michael Kisilenko framed the sentiment as “If you can’t beat them, debunk them,” describing the research as “sophisticated damage control from a company that bet wrong on AI.”

Meanwhile, Saanya Ojha noted the irony of the situation, observing that the paper had “strong ‘guy on the couch yelling at Olympic athletes’ energy” and pointing out that Apple was critiquing reasoning approaches while having “publicly released no foundation model” and being “widely seen as lagging in generative AI.”

Gary Marcus Claims Victory

Gary Marcus, the longtime AI skeptic, saw vindication. On June 7, he published “A knockout blow for LLMs?” arguing that Apple’s findings validated his decades of criticism about neural networks dating back to 1998.

Marcus highlighted what he saw as devastating details: large reasoning models (LRMs) failed on Tower of Hanoi with just 8 disks, a puzzle whose shortest solution is 2^8 − 1 = 255 moves, well within token limits. Models couldn’t execute basic algorithms even when explicitly provided. These were problems that first-year computer science students could solve, yet billion-dollar AI systems collapsed completely.

Marcus revealed private communications with Apple researchers, including co-author Iman Mirzadeh, who confirmed that models failed even when given solution algorithms. If these systems couldn’t solve problems that Herbert Simon tackled with 1950s technology, Marcus contended, this raised serious questions about the prospects for artificial general intelligence.

The Devastating Technical Rebuttal

Then came the academic equivalent of a precision strike. On June 13, Alex Lawsen from Open Philanthropy published his rebuttal: “The Illusion of the Illusion of Thinking,” co-authored with Anthropic’s Claude Opus.

Lawsen’s credentials made his critique particularly damaging. A Senior Program Associate at Open Philanthropy focusing on AI risks, he holds a Master of Physics from Oxford and has deep experience in AI safety research. This wasn’t a corporate hit job—it was rigorous academic analysis.

Lawsen identified three critical flaws that undermined Apple’s headline-grabbing conclusions:

Token Budget Deception: Models were hitting output token limits at the very complexity levels where Apple reported them “collapsing.” According to Lawsen’s analysis, Claude explicitly flagged when it was approaching those limits on Tower of Hanoi problems, saying in effect that it would stop listing moves to save tokens. Apple’s automated evaluation couldn’t distinguish between reasoning failure and practical output constraints.

Impossible Puzzle Problem: Most damaging of all, Apple’s River Crossing experiments included instances with six or more actor/agent pairs and a boat capacity of 3, which are mathematically unsolvable. Models were penalized for correctly recognizing these instances as impossible, which Lawsen characterized as equivalent to penalizing a SAT solver for correctly identifying an unsatisfiable formula.

Evaluation Script Bias: Apple’s system judged models solely on complete, enumerated move lists, unfairly classifying partial solutions as total failures even when the reasoning process was sound.
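The unsolvability claim in the River Crossing critique can be checked by brute force. The following is a minimal sketch, not Apple’s or Lawsen’s code: it assumes the usual reading of the puzzle (an actor may not be with another agent, on either bank or in the boat, unless their own agent is also present, and everyone in the boat disembarks on arrival). The names `is_safe` and `min_crossings` are hypothetical.

```python
from collections import deque
from itertools import combinations


def is_safe(group):
    """Assumed rule: if any agent is present, every actor present needs their own agent."""
    agents = {i for kind, i in group if kind == "agent"}
    if not agents:
        return True
    return all(i in agents for kind, i in group if kind == "actor")


def min_crossings(pairs, capacity):
    """Breadth-first search over (people on left bank, boat side).

    Returns the minimum number of crossings, or None if no solution exists.
    """
    everyone = frozenset(
        (kind, i) for i in range(pairs) for kind in ("actor", "agent")
    )
    start = (everyone, 0)  # boat side: 0 = left bank, 1 = right bank
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        (left, side), depth = queue.popleft()
        if not left:  # everyone has reached the right bank
            return depth
        bank = left if side == 0 else everyone - left
        for size in range(1, capacity + 1):
            for riders in combinations(sorted(bank), size):
                riders = frozenset(riders)
                if not is_safe(riders):  # constraint also applies in the boat
                    continue
                new_left = left - riders if side == 0 else left | riders
                if not (is_safe(new_left) and is_safe(everyone - new_left)):
                    continue
                state = (new_left, 1 - side)
                if state not in seen:
                    seen.add(state)
                    queue.append((state, depth + 1))
    return None  # search space exhausted: the instance is unsolvable


if __name__ == "__main__":
    print(min_crossings(3, 2))  # classic three-pair puzzle
    print(min_crossings(6, 3))  # the configuration Lawsen flagged
```

Under these assumptions the search exhausts every reachable state for six pairs with a three-seat boat and returns `None`, so an evaluator that expects a move list for that instance has no correct answer to compare against.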

Lawsen demonstrated the flaw by asking models to generate recursive solutions instead of exhaustive move lists. Claude, Gemini, and OpenAI’s o3 successfully produced algorithmically correct solutions for 15-disk Hanoi problems—far beyond the complexity where Apple reported zero success.
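To make the contrast between a recursive solution and an exhaustive move list concrete, here is a minimal sketch of the textbook recursive Tower of Hanoi procedure. It is an illustration only, not the prompt or output from Lawsen’s experiments; the function name `hanoi` and the peg labels are assumptions.

```python
def hanoi(n, source="A", target="C", spare="B"):
    """Lazily yield (disk, from_peg, to_peg) moves for an n-disk Tower of Hanoi."""
    if n == 0:
        return
    # Move the n-1 smaller disks out of the way, onto the spare peg.
    yield from hanoi(n - 1, source, spare, target)
    # Move the largest remaining disk to its destination.
    yield (n, source, target)
    # Move the n-1 smaller disks from the spare peg onto the largest disk.
    yield from hanoi(n - 1, spare, target, source)


if __name__ == "__main__":
    for disks in (8, 15):
        move_count = sum(1 for _ in hanoi(disks))
        print(disks, move_count)  # prints "8 255" then "15 32767"
```

The program stays a few lines long at any size, while the enumerated output grows as 2^n − 1: 255 moves for 8 disks but 32,767 for 15. A grader that accepts only the full list is therefore measuring output length as much as knowledge of the algorithm.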

Apple’s Conspicuous Silence

Apple has remained notably quiet about the methodological criticisms. The company has issued no comprehensive official statement addressing Lawsen’s technical analysis or other researchers’ concerns, a silence that stands out given its own track record: while Apple questioned whether AI models could truly “think,” many users were still wondering whether Siri could truly “listen.”

The only acknowledgment came indirectly at WWDC 2025, where Apple executives admitted to significant delays in AI development. Craig Federighi and Greg Joswiak stated that features “needed more time to meet our quality standards” and didn’t “work reliably enough to be an Apple product.” The AI-powered Siri upgrade was pushed to 2026.

Industry analysts described the event as showing “steady but slow progress” and being “largely unexciting.” The limited AI announcements came amid intense competition from Google’s I/O conference, which showcased massive new AI features.

The Broader Pattern of Questionable Research

The controversy becomes more troubling when viewed alongside Apple’s research history. The same team led by Mehrdad Farajtabar previously published the GSM-Symbolic paper, which faced similar methodological criticisms but was still accepted at major conferences despite identified flaws.

Researcher Alan Perotti noted that Apple’s latest paper was “underwhelming” and drew concerning parallels to the team’s previous questionable work. This pattern raises serious questions about peer review standards for corporate AI research.

Meanwhile, Apple has traditionally lagged behind competitors like Google, Microsoft, and OpenAI in AI development, focusing instead on privacy-preserving, on-device processing rather than cloud-based solutions.

The Academic Pile-On

As technical experts examined Apple’s methodology, the scientific community’s response grew increasingly harsh. Conor Grennan expressed frustration after reading Lawsen’s rebuttal, noting his irritation with Apple and stating that the study had been proven seriously flawed.

Sergio Richter published a critique titled “The Illusion of Thinking (Apple, 2025) — A Masterclass in Overclaiming,” arguing that Apple proved nothing and characterizing the research as “a branding heist” rather than genuine science. He noted the irony of Apple criticizing other models while deploying them directly into Apple Intelligence.

The research community began viewing Apple’s study as an attack on legitimate scientific work. Critics were particularly concerned that it dismissed prior research without proper justification and that Apple had not invited external peer review from competing organizations.

What This Reveals About AI Research

The Apple controversy exposes uncomfortable truths about the intersection of corporate interests and scientific research in the AI field. When a company’s competitive position influences its research conclusions, can we trust the science?

The speed with which business influencers formed strong opinions, before technical experts could evaluate the methodology, shows that business implications now drive these conversations on social media well ahead of the science.

This matters because the stakes are enormous. If Apple’s research had been sound, it would have fundamentally challenged assumptions about AI capabilities and potentially influenced billions in investment decisions. Instead, the methodological flaws suggest the study was more about corporate positioning than scientific advancement.

The Lasting Damage

While subsequent analysis largely debunked Apple’s claims, the initial narrative spread faster than the corrections. YouTube channels ran coverage under titles like “Apple Just SHOCKED Everyone: AI IS FAKE!?”, generating millions of views before the technical rebuttals emerged.

The controversy highlights the need for stronger norms around corporate AI research: independent peer review requirements, clearer disclosure of conflicts of interest, and more rigorous standards for experimental design in capability assessment.

Perhaps most importantly, it demonstrates that extraordinary claims about AI limitations, like extraordinary claims about AI capabilities, require extraordinary evidence—something Apple’s study failed to provide.

The Real Illusion

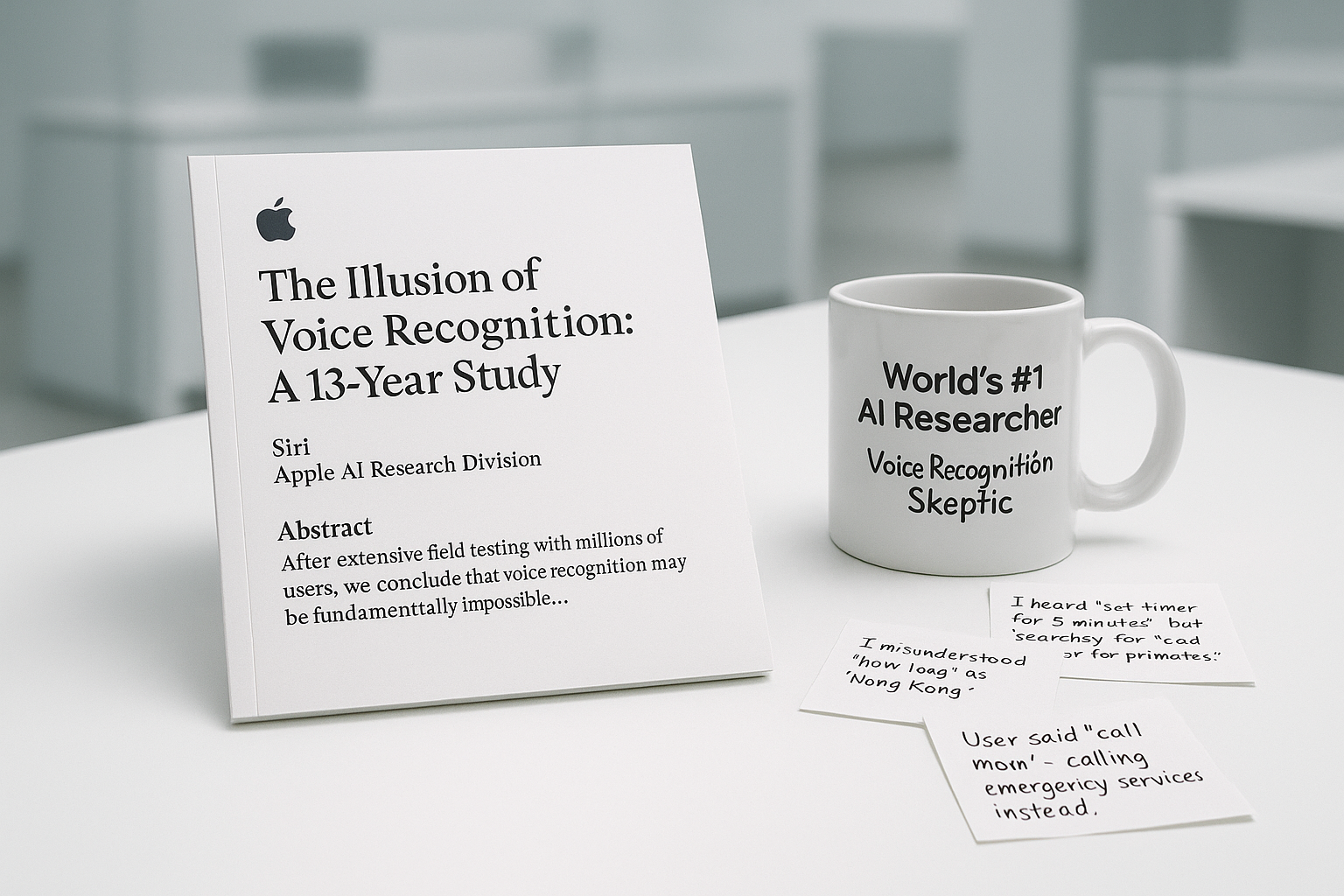

The true illusion wasn’t in AI thinking—it was in thinking that Apple’s research represented objective science rather than strategic positioning. The irony wasn’t lost on observers that Apple—whose Siri still struggles to understand basic requests after 13 years—was now positioning itself as the authority on AI reasoning capabilities. The company couldn’t lead on AI innovation, so it attempted to lead the conversation by undermining competitors’ achievements.

As one LinkedIn observer noted, Apple didn’t discover new limitations in AI reasoning—they repackaged existing criticisms, timed them strategically, and presented them as breakthrough research. The real breakthrough was in corporate messaging, not scientific understanding.

The field will likely see more such controversies as competitive pressures intensify. But if this episode leads to better standards for AI evaluation and more transparency in corporate research motivations, perhaps some good will emerge from this academic battlefield.

Key takeaway: Apple’s study said more about corporate strategy than AI limitations, serving as a cautionary tale about distinguishing genuine scientific inquiry from weaponized research in the high-stakes AI competition.